Author: admin

-

Pueblo De Abiquiú NM

The Penitente Moradas of Abiquiú are the sacred meeting houses for Los Hermanos Penitentes, a lay Catholic brotherhood that formed in the early 1800s.

Would you like to know more?

-

360° Fort Union NM Google Earth

Here is a collection of photosphere photos I took at Fort Union, New Mexico, back in 2022. I did some photo editing to give them a more rustic 1880s black-and-white look. I have been experimenting with UI and layout for the 3D Radiance field posts, and I wanted to see how it would work with my 360° Google Earth content.

-

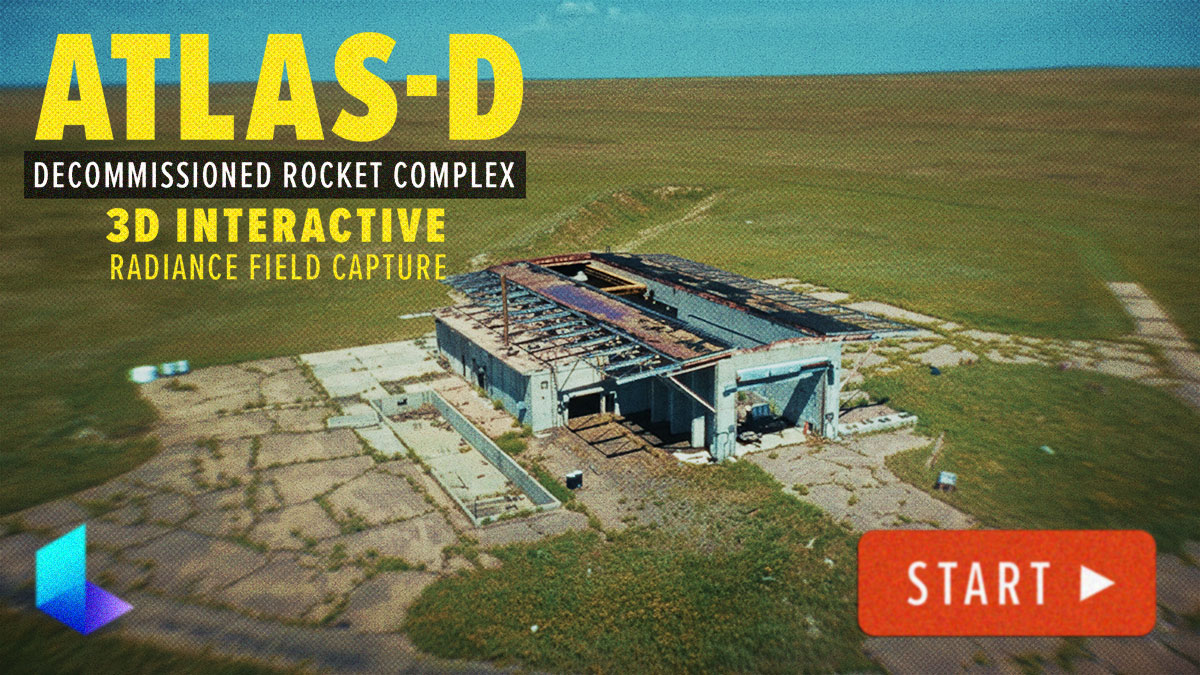

Decommissioned Atlas-D ICBM Launch Complex

Scattered throughout the rolling grasslands of America are many forgotten historic sites from the Cold War. ICBM development during the 1950s and 1960s moved quickly, with breakneck design iterations, so these launch facilities were often obsolete by the time they were completed and put into service.

-

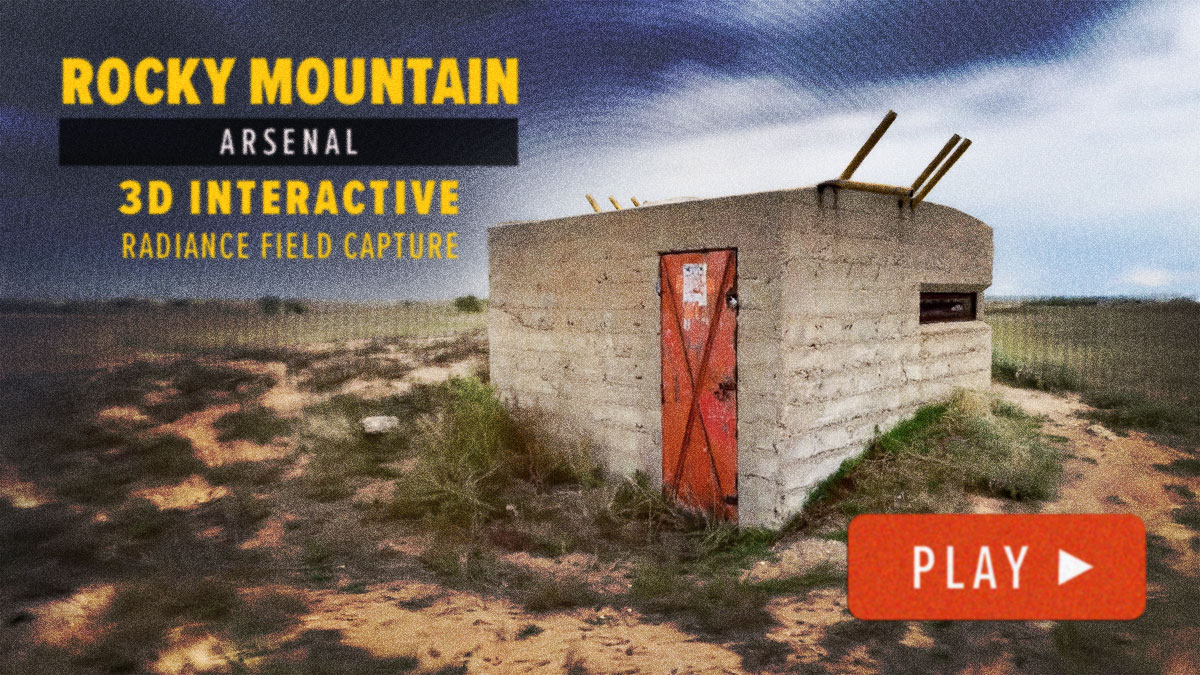

Rocky Mountain Arsenal

Here is a 3D Radiance capture of some structural WWII remains found at the Rocky Mountain Arsenal Wildlife Refuge. The site was used during the war years to create chemical weapons, and that work continued into the Cold War until nuclear weapons became the primary US deterrent. Searching for peacetime operations, the facility manufactured fuel for NASA and leased some of its property to Shell Chemical Company for the production of agricultural chemicals. Eventually the site was demilitarized, cleaned up, and turned into a wildlife refuge.

It’s a picturesque location close to Denver, and they did a great job of restoring it to how it may have looked prior to the installation. If you are visiting Denver and want to see some bison, it’s easy to do so here.

-

Gloriana is still the best song from this millennium

Sorry, Taylor Swift, but it’s true! Gloriana from Quickspace 94-05, a song from their 2000 album “The Death of Quickspace,” is still and will always be the best song from this millennium. Pure science went into this determination. Yup, not only double blind or triple blind: sleeves were rolled up, dogs barked at the sun, and quad blind studies were conducted. The study was so intense that a once well-known Ivy League school went bankrupt and was erased from history and public memory trying to disprove the research. Having such an old, well-loved, and storied institution redacted from the fabric of time often has unusual consequences. As a result, this song is not well known, but it is still the best song from this millennium.

-

1992 E250 Ford Camper Van

On a road somewhere in northeastern Colorado.

-

David Lynch Interactive: Focus 0.1

Here is the first iteration of an interactive module using a combination of real content from David Lynch’s movie Mulholland Dr. and generative content. With this I wanted to test the use of transparent videos and generative AI content. I used Google’s Gemini, Luma AI Ray2, and OpenAI’s 4o, plus a decent amount of work in After Effects. I attempted to do something like this back in 2013 using Flash, but it did not work well. It was clunky and slow and was never used in any work projects. I wish I still had the file.

-

Decommissioned Atlas-D Coffin Launch Bunker

Somewhere north of Cheyenne, Wyoming, monolithic remains of the Cold War still stand.

-

Quentin Tarantino’s Death Proof Loop.

The above video loop was generated from a single studio photo from Quentin Tarantino’s 2007 Death Proof.
